I've taken some publicly available fonts and extracted glyphs from them to make a dataset similar to MNIST. There are 10 classes, with letters A-J taken from different fonts.

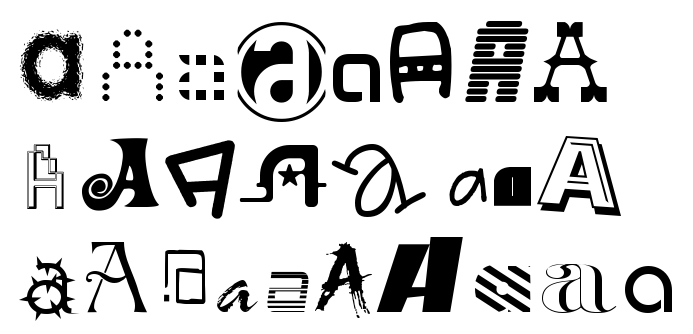

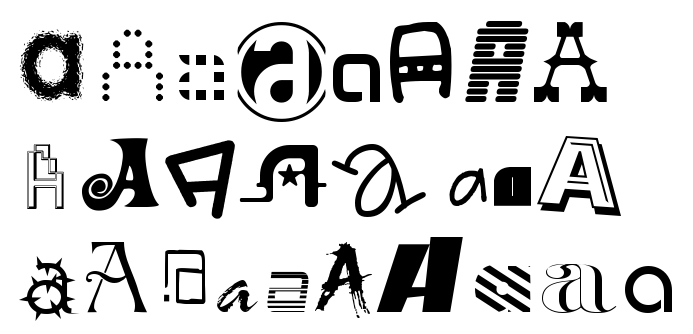

Here are some examples of the letter "A".

Judging by the examples, one would expect this to be a harder task than MNIST. This seems to be the case: logistic regression on top of a stacked auto-encoder with fine-tuning gets about 89% accuracy, whereas the same approach gets 98% on MNIST.

The dataset consists of a small hand-cleaned part, about 19k instances, and a large uncleaned part, 500k instances. The two parts have approximately 0.5% and 6.5% label error rates, respectively. I got these figures by looking through glyphs and counting how often my guess of the letter didn't match its Unicode value in the font file.
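
The error-rate estimate above boils down to comparing a manual guess against the font's label over a sample of glyphs. Here is a minimal sketch of that count. The helper names and the sample data are made up for illustration, and the standard-error line is the usual binomial approximation rather than anything from the original measurement.

```python
import math

def label_error_rate(guesses, labels):
    """Fraction of inspected glyphs whose manual guess disagrees with the font label."""
    if len(guesses) != len(labels):
        raise ValueError("guesses and labels must have the same length")
    if not labels:
        raise ValueError("need at least one inspected glyph")
    mismatches = sum(g != l for g, l in zip(guesses, labels))
    return mismatches / len(labels)

def binomial_std_error(rate, n):
    """Approximate standard error of a rate estimated from n inspected glyphs."""
    return math.sqrt(rate * (1.0 - rate) / n)

# Illustrative data only: ten inspected glyphs, one disagreement.
guesses = list("AAABBCDDEA")
labels = list("AAABBCDDEJ")
rate = label_error_rate(guesses, labels)
std_error = binomial_std_error(rate, len(labels))

# At a 6.5% error rate, the 500k uncleaned part holds roughly this many bad labels.
expected_bad_labels = round(0.065 * 500_000)
```

The more glyphs are inspected, the tighter the estimate, so a figure like 0.5% is only meaningful if it comes from at least a few hundred inspected glyphs.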

The Matlab version of the dataset (.mat file) can be accessed as follows:

load('notMNIST_small.mat')

for i=1:5

figure('Name',num2str(labels(i))),imshow(images(:,:,i)/255)

end

The zipped version is just a set of PNG images grouped by class. You can turn the zipped version of the dataset into the Matlab version as follows:

tar -xzf notMNIST_large.tar.gz

python matlab_convert.py notMNIST_large notMNIST_large.mat
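
If you'd rather skip Matlab, the extracted archive can be indexed directly. This sketch assumes the archive unpacks into one sub-folder per class (for example `notMNIST_large/A`, `notMNIST_large/B`, and so on); that layout and the helper name `list_labeled_pngs` are assumptions, so adjust them to what you actually see on disk.

```python
from pathlib import Path

def list_labeled_pngs(root):
    """Return sorted (path, label) pairs; the label is the class folder name."""
    root = Path(root)
    samples = []
    # Only sub-directories count as classes; stray files in root are ignored.
    for class_dir in sorted(p for p in root.iterdir() if p.is_dir()):
        for png in sorted(class_dir.glob("*.png")):
            samples.append((png, class_dir.name))
    return samples

# Usage (path is hypothetical):
#   samples = list_labeled_pngs("notMNIST_large")
#   print(len(samples), samples[0])
```

Decoding the PNG files themselves needs an image library of your choice, which is left out here to keep the sketch dependency-free.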

Approaching 0.5% error rate on notMNIST_small would be very impressive. If you run your algorithm on this dataset, please let me know your results.

629 comments:

What does your baseline get on the negated version of the dataset? In other words, make the "ink" pixels have intensity 1 and the non-ink pixels have intensity zero. I would be curious to know if your baseline does better on one version or the other.

I don't expect it to make any difference -- pixel-level features are learned by the stacked autoencoder, and there's nothing biasing the learner to prefer 0's or 1's to start with.

It makes a difference on MNIST, which is why I asked.
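
For anyone who wants to try the negated version discussed above, the transformation is a one-liner per pixel. This is a sketch on plain nested lists with an assumed 0-255 intensity range; it makes no claim about which polarity the released files use.

```python
def negate(image, max_value=255):
    """Swap ink and background: each pixel p becomes max_value - p."""
    return [[max_value - p for p in row] for row in image]

# Tiny made-up 2x2 "image" to show the effect.
tiny = [[0, 255], [128, 64]]
negated = negate(tiny)
```

Applying `negate` twice returns the original image, so the two versions carry exactly the same information; any accuracy difference comes from the learner, not the data.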

Can we use this data for research? For instance, if we obtain relatively good results or propose something novel, are we allowed to publish anything on it?

regards

ML_random_guy

ML_random_guy -- that depends on whether your country has laws against publishing

Hello, it's nice to have such a new challenging dataset. Do you recommend a specific evaluation protocol (number of training/test images) ? Otherwise people will work on different subsets and results will not be directly comparable.

Train on the whole "dirty" dataset, evaluate on the whole "clean" dataset.

This is a better indicator of real-life performance of a system than traditional 60/30 split because there is often a ton of low-quality ground truth and small amount of high quality ground truth. For this task, I can get millions, possibly billions of distinct digital glyph images with 5-10% labels wrong, but I'm stuck with small amount of near perfectly labeled glyphs

Thanks for the protocol info. Would it be possible to get a tar archive with PNG images of the small dataset like the huge one ? I'm not using Matlab.

Oops, small tar should've been in the directory to start with, fixed

I used this dataset to test some of my code and got about 3.8% error rate. Are there more results known for this dataset? A few lines of text are here.

Hey, that's pretty impressive! This is the highest accuracy I know. I'm working on a larger dataset to release publicly, but slowed down by some legal clearance hurdles

How do you do finetuning? Hinton's contrastive wake-sleep?

What is unicode370k.tar.gz?

It's a bunch of characters taken from the tail end of unicode values

http://yaroslavvb.blogspot.com/2011/11/shapecatcher.html

How did you split your dataset into train,valid,test to get 89%?

Hi, Zhen Zhou and I, from the LISA lab at Université de Montréal, trained a couple of 4-layer MLPs with 1024-300-50 hidden neurons respectively. We divided the noisy set into 5/6 train and 1/6 valid and kept the clean set for testing. We got 97.1% accuracy on the test set at epoch 412 with early stopping, linear decay of the learning rate, a hard constraint on the norm of the weights and tanh activation units. We get approximately 93% on valid and 98% on train. The train set is easy to overfit (you can get 100% accuracy on train if you continue training). One could probably do better by pursuing hyperparameter optimization further. We used Torch 7.
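
The 5/6 train, 1/6 valid split of the noisy set described above can be sketched as follows. The function name, the fixed seed and the use of integer division are illustrative choices, not details taken from the comment.

```python
import random

def split_train_valid(items, valid_one_in=6, seed=0):
    """Shuffle items and hold out one part in `valid_one_in` for validation."""
    items = list(items)
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    rng.shuffle(items)
    n_valid = len(items) // valid_one_in
    return items[n_valid:], items[:n_valid]

# Stand-in for the noisy set: 600 sample indices.
train, valid = split_train_valid(range(600))
```

The clean set stays untouched for testing, which matches the protocol of training on the dirty data and evaluating on the clean data.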

I got 89.3% accuracy on the test set with a simple neural network (784, 1024, 10), where the activation functions were ReLU and then just a normal softmax. This was without activation decay, pre stop, dropout & co., with 3001 iterations and a batch size of 128.

Step: 3000

Minibatch accuracy: 86.7%

Validation accuracy: 82.6%

Finish (after Step 3001):

Test accuracy: 89.3%

Minibatch loss at step 3000: 55.872269

Minibatch accuracy: 79.7%

Validation accuracy: 84.4%

Test accuracy: 90.6%

With a neural network with a single hidden layer (1024 nodes), Relu and l2 regularization.

Minibatch loss at step 10000: 123.963661

Minibatch accuracy: 45.3%

Validation accuracy: 85.3%

Test accuracy: 91.4%

With a dropout and relu and l2 regularizer, single hidden layer 1024 node.

Yaroslav Bulatov,

Thank you for the fun and challenging dataset.

How were the names of the files chosen?

I'm working on renaming each one to the phash value of the image. It looks like the names might already be the result of a hash.

Check out the Udacity course in deep learning, made by Google. They use this dataset extensively and show some really powerful techniques. The goal of the last assignment was to experiment with these techniques to find the best accuracy using a regular multi-layer perceptron. I have a pretty beefy machine: 6600K OC, 2x GTX 970 OC, 16 GB DDR4, Samsung 950 Pro; so I set up a decent-sized network and let it train for a while.

My best network gets:

Test accuracy: 97.4%

Validation accuracy: 91.9%

Minibatch accuracy: 97.9%

First I applied a Phash to every image and removed any with direct collisions. Then I split the large folder into ~320k training and ~80k validation. I used ~17k in the small folder for testing. Trained on mini-batches using SGD on the cross-entropy, dropout between each layer and an exponentially decaying learning rate. The network has three hidden layers with RELU units, plus a standard softmax output layer.
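
The duplicate-removal step described above can be approximated with a plain content hash. Note the swap: this sketch uses SHA-1 over the raw file bytes, which only catches byte-identical files, whereas a perceptual hash also groups images that look the same but differ in encoding. The function name and input format are hypothetical.

```python
import hashlib

def drop_exact_duplicates(images):
    """images: iterable of (name, raw_bytes). Keep the first file for each distinct content."""
    seen = set()
    unique_names = []
    for name, data in images:
        digest = hashlib.sha1(data).hexdigest()
        if digest in seen:
            continue  # same bytes already kept under another name
        seen.add(digest)
        unique_names.append(name)
    return unique_names

# Made-up example: "c.png" has the same bytes as "a.png".
kept = drop_exact_duplicates([("a.png", b"x"), ("b.png", b"y"), ("c.png", b"x")])
```

Running the same check across the training and test folders is also a cheap way to see how much overlap there is between them.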

Here are the parameters:

Mini-batch size: 1024

Hidden layer 1 size: 4096

Hidden layer 2 size: 2048

Hidden layer 3 size: 1024

Initial learning rate: 0.1

Dropout probability: 0.5

I ran this for 150k iterations, which took an hour and a half using one GPU. Learning pretty much stopped at 60k, but the model never began to overfit. I believe that is because of the size of the dataset and the dropout. Even at the end of all that training with a good-sized network, the mini-batch accuracy still did not reach 100%, so learning could continue, albeit slowly.

The next assignment is to use a convolutional network, which looks promising. I'll try to post those results too.

Could you make your code available? Or at least say which parameters you used for the exponentially decaying learning rate? Did you use L2 regularization (if yes, with which regularization factor)? I tried to use the same network as you did and it simply doesn't converge.

Test accuracy: 98.09%

With a CNN layout as follows:

3 x convolutional (3x3)

max pooling (2x2)

dropout (0.25)

3 x convolutional (3x3)

max pooling (2x2)

dropout (0.25)

dense (4*N)

dropout (0.5)

dense (2*N)

dropout (0.5)

dense (N)

dropout (0.5)

softmax (10)

N is the number of pixels in the images. All layers use ReLU activation. I also used some zero padding before each convolutional layer. The network was trained with Adadelta. It took ~45 iterations with early stopping at patience 10. As a final step I ran SGD with the same early stopping and a decaying learning rate starting at 0.1. It ran about 15 iterations. Evaluating the network on the training set, the accuracy was 99.07%, and it was 94.25% on the validation set.
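
For readers unfamiliar with "early stopping at patience 10", here is one common reading of it: stop once the validation score has failed to improve for `patience` consecutive epochs, and keep the best epoch seen so far. Definitions vary between libraries, so treat this as a sketch of the idea rather than the exact rule used above; the function name is made up.

```python
def early_stopping_epoch(val_scores, patience=10):
    """Return (stop_epoch, best_epoch) for per-epoch validation scores (higher is better)."""
    best_score = float("-inf")
    best_epoch = -1
    for epoch, score in enumerate(val_scores):
        if score > best_score:
            best_score, best_epoch = score, epoch
        elif epoch - best_epoch >= patience:
            # No improvement for `patience` epochs in a row: stop here.
            return epoch, best_epoch
    # Ran out of epochs without triggering the stop.
    return len(val_scores) - 1, best_epoch

# Made-up curve: improves for three epochs, then plateaus below the best value.
scores = [0.5, 0.6, 0.7] + [0.65] * 20
stop_epoch, best_epoch = early_stopping_epoch(scores, patience=10)
```

In practice you would restore the weights saved at `best_epoch` rather than use the weights from `stop_epoch`.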

Minibatch loss at step 4999: 0.901939

Minibatch accuracy: 75.0%

Validation accuracy: 87.3%

Test accuracy: 93.3% @step=4999

Model saved in file: save/myconvnet_5000

I used an architecture similar to LeNet, and it seems to get better as the number of steps gets larger.

Where can I download notMNIST? The link above goes to an account that has been suspended.

Not sure if this is the complete dataset, but the Udacity course on Deep Learning using notMNIST provides the following links:

http://commondatastorage.googleapis.com/books1000/notMNIST_large.tar.gz

http://commondatastorage.googleapis.com/books1000/notMNIST_small.tar.gz

Test accuracy: 96.98%

With a CNN layout with following configurations, which is similar to [LeNet5](http://culurciello.github.io/tech/2016/06/04/nets.html)

However there is little difference

convolutional (3x3x8)

max pooling (2x2)

dropout (0.7)

relu

convolutional (3x3x16)

max pooling (2x2)

dropout (0.7)

relu

convolutional (3x3x32)

avg pooling (2x2): according to above article

dropout (0.7)

relu

fully-connected layer (265 features)

relu

dropout (0.7)

fully-connected layer (128 features)

relu

dropout (0.7)

softmax (10)

decaying learning rate starting at 0.1

batch_size: 128

Training accuracy: 93.4%

Validation accuracy: 92.8%

Accuracy: 96.1 without convolution (assignment 3 in TensorFlow course)

Using Xavier initialization significantly boosted my results. Network specifications:

1. Batch size = 2048

2. Hidden units: 4096, 2048, 1024

3. Adam optimizer with 0.0001 learning rate

4. Dropout on each hidden layer

5. Xavier initialization
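
Since Xavier initialization comes up repeatedly in this thread, here is a small dependency-free sketch of the uniform variant: weights are drawn from U(-limit, limit) with limit = sqrt(6 / (fan_in + fan_out)), which gives a variance of 2 / (fan_in + fan_out). The comment above does not say whether the uniform or normal variant was used, so the choice here is an assumption, and the layer size is kept small only so the example runs quickly.

```python
import math
import random

def xavier_uniform(fan_in, fan_out, rng=None):
    """Return a fan_in x fan_out weight matrix drawn from the Xavier/Glorot uniform range."""
    rng = rng or random.Random(0)  # seeded for reproducibility in this sketch
    limit = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-limit, limit) for _ in range(fan_out)]
            for _ in range(fan_in)]

# 784 inputs (28x28 pixels) feeding a deliberately tiny layer of 16 units.
weights = xavier_uniform(784, 16)
```

The point of the scaling is that wide layers get proportionally smaller weights, which keeps activations from blowing up or vanishing in deep stacks like 4096-2048-1024.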

Hi

I'm trying to use tensorflow to do character recognition. I am able to use your dataset(A-J) and get some data from char74k dataset (from K to Z) to train character data and predict. but the char74k set is a pretty limited set and is not enough to get a good accuracy. Have you posted anything similar for characters from K to Z?

no convolution, 1 hidden layer 94.4 % with test set

batch size 128

L2 regularization beta 0 (no L2 regularization)

initialize w with deviation 0.03

initialize bias with all 0

Learning rate 0.5 (fix, not decay)

single hidden layer unit # 1024

dropout_keepratio 1 (no dropout)

I'm following udacity tutorial.

It's strange that whenever i put L2 regularization, dropout, Learning rate decay, the test accuracy falls. I can't figure out why.

Multi Layer Neural Net without convolution - Test Accuracy = 94.4%

Architecture

3 Layer Neural Network(No convolution) = input-784, hidden-526, output=10

L2- Regularization with lambda(regularization parameter) = .001

Number of steps = 3000

Batch size = 500

2 hidden layers (total 4 layers) - without convolution - Test Accuracy = 95.8%

Architecture

3 Layer Neural Network(No convolution) = input-784, hidden1-960, hidden2=650 output=10

L2- Regularization with lambda(regularization parameter) = .0005

Number of steps = 75000

Batch size = 1000

Minibatch accuracy: 93.2%

Validation accuracy: 91.2%

Test accuracy: 96.3%

After 10000 steps.

Architecture:

Two hidden layers:

num_hidden_nodes = 1024

num_hidden_nodes_2 = 100

Both with Relu inputs. Cross entropy + L2 regularization (beta = 1.3e-4).

SGD, batch size 400.

Most importantly, weights were initialized with truncated normal distro. with sigma = 0.01.

Exponential decay starting at 0.5, 0.65 decay_rate every 1000 steps.

Using Keras on an average gaming laptop with moderate GPU, training took less than 2' on the full (udacity) training set of 200.000 samples, using 10.000 validation samples and measuring accuracy on separate test set of 10.000 samples.

With a simple multilayer network, I reached 96.66%

With KERAS, the code for the network itself is really simple:

batch_size = 128

nb_classes = 10

nb_epoch = 20

model = Sequential()

model.add(Dense(1024, input_shape=(784,)))

model.add(Activation('relu'))

model.add(Dropout(0.2))

model.add(Dense(512))

model.add(Activation('relu'))

model.add(Dropout(0.2))

model.add(Dense(256))

model.add(Activation('relu'))

model.add(Dropout(0.2))

model.add(Dense(10))

model.add(Activation('softmax'))

model.summary()

model.compile(loss='categorical_crossentropy',

#optimizer=RMSprop(),

optimizer='adagrad',

#optimizer='adadelta',

metrics=['accuracy'])

history = model.fit(train_dataset, train_labels,

batch_size=batch_size, nb_epoch=nb_epoch,

verbose=1, validation_data=(valid_dataset, valid_labels))

score = model.evaluate(test_dataset, test_labels, verbose=0)

If you want to generate your own dataset like notMNIST, you should try not_notMNIST

My final result is 96.23% accuracy. Network architecture (built with Keras):

conv(3x3x32)

maxp(2x2)

dropout(0.05)

conv(3x3x16)

maxp(2x2)

dropout(0.05)

dense(128, relu)

dense(64, relu)

dense(10, softmax)

I used SGD with default params. I also got 92.03% on the valid dataset and 92.24% on the train dataset. It seems to be a general tendency that the test score is higher.

97.2% on a fully connected net.

At last iteration, 100k:

Minibatch accuracy: 99.0%

Validation accuracy: 92.2%

Test accuracy: 97.2%

Architecture:

3 hidden layers, 4096 - 3072 - 1024, with relu and 0.5 dropout

Xavier weight init

Batch size 200

Data sets original (200k train, 10k valid, 10k test), no further preprocessing

Loss: softmax_cross_entropy_with_logits + L2 regularization on weights with weight of 1e-4

Learning rate 0.3 with decay of 0.96 every 1000 iterations

Total 100k iterations

[edit - I forgot the dropout on first post]

Test accuracy: 96.12% with only 5000 iterations on a convolutional network with two conv layers and a final fully connected layer.

Minibatch of 50 images was used.

On a very simple 1 hidden layer network without regularization I also get:

Minibatch accuracy: 89.8%

Validation accuracy: 82.9%

Test accuracy: 89.8%

I've seen many other users reporting Test accuracy which is significantly higher than validation accuracy.

Validation and Test are the same size in my case. Is a higher test score reasonable or is it just chance? Should I consider the worst between test and validation as the expected performance of my network?

Gianni
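
One way to check whether a gap like the one above could be chance is to compare it with the sampling noise of each accuracy estimate. The sketch below uses the binomial standard error and assumes 10,000 samples in each set, as in the Udacity split mentioned elsewhere in this thread; the set size is an assumption, since the comment only says the two sets are the same size.

```python
import math

def accuracy_std_error(accuracy, n):
    """Binomial standard error of an accuracy measured on n independent samples."""
    return math.sqrt(accuracy * (1.0 - accuracy) / n)

n = 10_000  # assumed size of both the validation and the test set
valid_acc, test_acc = 0.829, 0.898

se_valid = accuracy_std_error(valid_acc, n)
se_test = accuracy_std_error(test_acc, n)
se_gap = math.sqrt(se_valid ** 2 + se_test ** 2)

# How many standard errors wide the validation/test gap is.
z = (test_acc - valid_acc) / se_gap
```

Each accuracy is only uncertain by a fraction of a percentage point at this sample size, so a gap of several points is far larger than chance. A likely explanation, if validation data is carved out of the uncleaned set while testing uses the hand-cleaned set, is the difference in label error rates quoted in the post (about 6.5% versus 0.5%): a correct prediction is counted as wrong whenever the validation label itself is wrong.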

I use 2 hidden layers and GradientDescentOptimizer, but the loss is nan. Why?

Try to reduce your learning rate

96.2% on a fully connected net.

steps, 200000:

batch 200 accuracy: 94.0%

test accuracy: 96.2%

https://github.com/ms03001620/NotMnist

test acc 98.3%

mini-batch train acc 95.7%

val acc 94.2%

Techniques: "shallow" resnet (used val set to select arch), dropout, horizontal + vertical shift data augmentation, reduce lr on plateaus.

implementation
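
The horizontal and vertical shift augmentation mentioned above is simple enough to sketch on a nested-list image. The function name, the zero fill value and the sign convention (positive `dx` moves right, positive `dy` moves down) are choices made for this example, not details of the linked implementation.

```python
def shift_image(image, dx, dy, fill=0):
    """Shift a 2D image by dx columns and dy rows, filling uncovered pixels with `fill`."""
    height = len(image)
    width = len(image[0])
    shifted = [[fill] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            ny, nx = y + dy, x + dx
            # Pixels pushed past the border are dropped.
            if 0 <= ny < height and 0 <= nx < width:
                shifted[ny][nx] = image[y][x]
    return shifted

# Made-up 3x3 image shifted one pixel right and, separately, one pixel down.
tiny = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
right = shift_image(tiny, dx=1, dy=0)
down = shift_image(tiny, dx=0, dy=1)
```

For 28x28 glyphs, shifts of one or two pixels are a typical starting range, since larger shifts start cutting off parts of the letter.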

Test Accuracy 95.5%

with batch size = 128, number of iterations = 10k

Three 5x5 convolution layers of depth 16, 32, 64 respectively

Three hidden layers with number of hidden nodes 256, 128 and 64 respectively

Dropout 0.7

Learning decay starting with 0.2 learning rate

https://sandipanweb.wordpress.com/2017/08/03/deep-learning-with-tensorflow-in-python-2/

Test accuracy: 97.2%

Implementation:

2 CNNs with max pooling followed by a 1 layer fully-connected NN:

Patch size = 7x7

Stride for CNN = 1

Size of pooling size = 2x2

Stride for Pooling = 2

Depth = 50, 100

Final layer nodes = 512

Dropout_keep_probe = 0.7

Managed to achieve 97.3% test score!

Used 5 hidden layers, batch_size = 256, adam optimisation with initial learning rate of 1e-4, 200,000 steps. Would be happy to share details, code etc. if anybody is interested.

Test accuracy: 98.0%

Implementation:

2 CNNs with max pooling followed by a 1 layer fully-connected NN:

Patch size = 5x5

Stride for CNN = 1

Size of pooling size = 2x2

Stride for Pooling = 2

Depth = 50, 100

Hidden layer Nodes in FCNN = 512

Dropout_keep_probe = init: 95%, decaying to 70%

Udacity Deep Learning course challenged me to get as high accuracy as I can using only dense layers, without any convolutions.

09-16 04:03:04.724 assignment_03_regularization.py:470 INFO Train loss: 0.167651, train accuracy: 97.99%

09-16 04:03:04.725 assignment_03_regularization.py:473 INFO Test loss: 0.256166, TEST ACCURACY: 96.51% BEST ACCURACY 96.64% <<<<<<<

Managed to achieve only as high as 96.6% with the following model:

- 5 fully connected layers 2048-1024-1024-1024-512

- 0.5 dropout

- batch normalization

- weight decay with 0.00001 scale

- batch 128 images

- Adam optimizer with starting LR=1e-4

- Xavier weight initialization (this is critical!)

This is on "sanitized" test dataset, where I removed all images that were identical or close to some images in training data. Without this sanitizing, it would've probably been a bit better.

Trained that for 2 hours on GTX1060, it continued to climb higher, but slowly.

Code is here: https://github.com/alex-petrenko/udacity-deep-learning/blob/master/assignment_03_regularization.py

(function is called train_deeper_better())

Simple convnet achieved 98.19% on test, code here: https://github.com/alex-petrenko/udacity-deep-learning/blob/14714ee4151b798cde0a31a94ac65e08b87d0f65/assignment_04_convolutions.py#L39

(5,5)->(5,5)->pool->(3,3)->(3,3)->pool->fc1024->fc1024->logits

INFO Starting new epoch #121!

INFO Minibatch loss: 0.150696, reg loss: 0.041653, accuracy: 96.88%

INFO Train loss: 0.068505, train accuracy: 99.28%

INFO Test loss: 0.118685, TEST ACCURACY: 98.05% BEST ACCURACY 98.19% <<<<<<<

Test accuracy: 96.7%

Implementation:

* 2 hidden layers of 1024 & 256 nodes

* weight initialization using gaussian random distribution with stddev = 2/sqrt(size of layer input)

* minibatch size of 128

* 30 epochs -each epoch full pass over train data set, randomly shuffled by minibatches (200000 / 128 = 1563 steps per epoch)

* learning rate 0.1

* dropout with keep_prob = 0.9

* no L2 regularization

After epoch 30:

train accuracy = 98.0%

dev accuracy = 92.0%

test accuracy = 96.7%
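
The step count and the initializer scale in the configuration above are easy to check by hand. The sketch below reproduces the arithmetic and follows the formula exactly as written in the comment (stddev = 2 / sqrt(fan_in)); note that the better-known He initialization uses sqrt(2 / fan_in) instead, which is a smaller value. The variable and function names are made up.

```python
import math

train_size = 200_000
batch_size = 128
epochs = 30

# The last, partial minibatch still counts as a step, hence the ceiling.
steps_per_epoch = math.ceil(train_size / batch_size)
total_steps = steps_per_epoch * epochs

def init_stddev(fan_in):
    """Standard deviation as stated in the comment: 2 / sqrt(size of layer input)."""
    return 2.0 / math.sqrt(fan_in)

first_layer_stddev = init_stddev(784)    # 28x28 input pixels
second_layer_stddev = init_stddev(1024)  # fed by the 1024-unit hidden layer
```

So 30 epochs at this batch size is a bit under 47k steps, which makes the run comparable to the step counts other commenters report.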

My best straight-forward CNN:

C5x4-C19x8-C5x16-P2-C7x64-P2-C3x256-P3S-C1x1024-C1x512-F2048-F64-F10

where

C5x4 = convolution with 5x5 kernel and 4 maps output

P3S = pooling with 3x3 size of type SAME

ReLU

initial weight SD: 0.05 for conv layers, Xavier for full layers

max pooling

full layer dropout 0,6

conv layer dropout 0,1

conv layer dropout before pooling

shuffle train dataset after each epoch

momentum optimizer with learning rate 0.05

batch size 2048

after 470 epochs (early stop):

train accuracy 99.6%

validation accuracy 94.6%

test accuracy 98.2%

This configuration was evolved looking for hyperparameter optimization, and came up at the 103rd configuration try. Further runs of another 34 configurations did not improve it.

During training, I have seen as much as 98.4% on test set, but corresponding to lower validation accuracy. In general, several runs of the same configuration could end up with 0.3% difference in validation accuracy. So ultimately one could run several times the winner configuration until the lucky initial weights combination is reached.

I got 94.7% accuracy following the Udacity deep learning course's dataset partition:

200000 for training

10000 for validation and testing each.

I took the lenet5 architecture as inspiration and added some tricks:

Conv with depth 6

Max pooling

Conv with depth 16

Max pooling

Conv with depth 120

Fully Connected layer with 84 units

The kernel size was 5x5 for all the conv layers with stride of 1.

I used L2 regularization in the fully connected weights with beta = 3 * 1e-3 and using softmax cross entropy in the loss function.

Finally, I added dropout in the FC layer with prob = 0.5.

The optimizer is SGD (minibatch size = 128) with exponential decay learning rate:

initial Lr: 0.1

decay steps: 100000

decay rate: 0.96

The learning rate is decayed at discrete intervals.
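
A learning rate that is "decayed at discrete intervals" is usually a staircase schedule: the rate is multiplied by the decay rate once every `decay_steps` steps and stays flat in between. Here is a sketch using the values listed above; the function name is illustrative and not taken from any particular library.

```python
def staircase_exponential_decay(step, initial_lr=0.1, decay_steps=100_000, decay_rate=0.96):
    """Learning rate after `step` steps, dropping by `decay_rate` every `decay_steps` steps."""
    return initial_lr * decay_rate ** (step // decay_steps)

lr_start = staircase_exponential_decay(0)
lr_before_first_drop = staircase_exponential_decay(99_999)
lr_after_first_drop = staircase_exponential_decay(100_000)
```

With these particular values the rate stays at 0.1 for the first 100,000 steps, so a run of a few thousand steps never sees any decay at all.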

This is a relatively small net for the current standards, so it runs pretty fast :)

If it is run for more steps, it can achieve up to 96% accuracy with around 5k steps. I suspect it can achieve even more, but I don't have either patience or a decent GPU :P

I just released a similar dataset for Chinese characters.

https://medium.com/@peterburkimsher/making-of-a-chinese-characters-dataset-92d4065cc7cc

The notMNIST data is being used in CS231n, but I wanted to try something different. So I made my own! Please let me know if it's useful.


Oracle Fusion Financials Online Training

Oracle Fusion HCM Online Training

Oracle Fusion SCM Online Training

Best Course Indonesia

Easy Indonesian CoursesLearning Indonesia

Indonesia Courses

Indonesia Courses

www.lampungservice.com

Service HP

lampungservice.com

Makalah Bisnisilmu konten

Lampung Service

Service Center Vivo

Cara Menghidupkan HP Mati Total Baterai Tanam

Service Center Samsung

Kursus Service HP Bandar Lampung

Kursus Service HP Bandar Lampung

Kursus Service HP Tasikmalaya

Bimbel Lampung
