I've taken some publicly available fonts and extracted glyphs from them to make a dataset similar to MNIST. There are 10 classes, with letters A-J taken from different fonts.

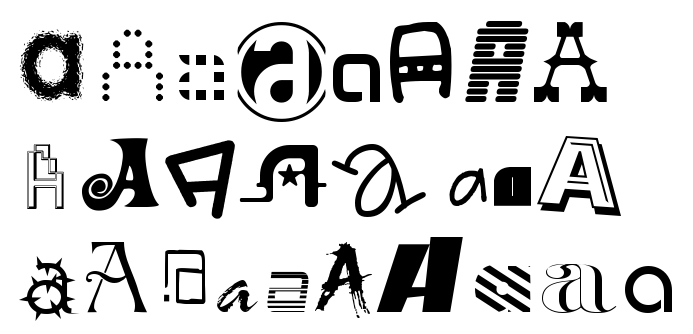

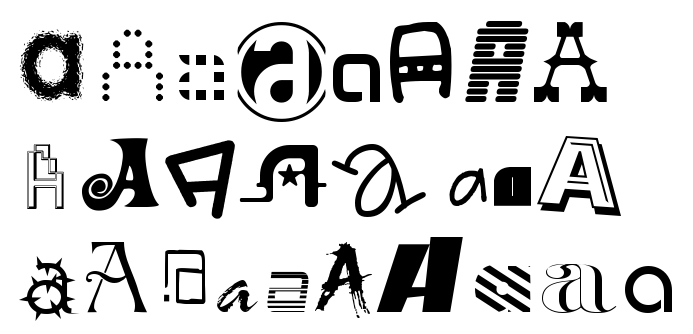

Here are some examples of the letter "A".

Judging by the examples, one would expect this to be a harder task than MNIST. This seems to be the case: logistic regression on top of a stacked auto-encoder with fine-tuning gets about 89% accuracy, whereas the same approach got 98% on MNIST.
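Turning the two quoted accuracies into error rates makes the gap more concrete. This uses only the figures in the previous paragraph:

$$
\frac{1 - 0.89}{1 - 0.98} = \frac{0.11}{0.02} = 5.5
$$

In other words, the same pipeline has an error rate roughly 5.5 times higher on notMNIST than on MNIST.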

The dataset consists of a small hand-cleaned part (about 19k instances) and a large uncleaned part (500k instances). The two parts have label error rates of approximately 0.5% and 6.5%, respectively. I estimated these rates by looking through the glyphs and counting how often my guess of the letter didn't match its Unicode value in the font file.
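The estimate described above is just a mismatch fraction over the inspected glyphs. A minimal sketch, assuming you have recorded the font's label and your own visual guess for each glyph you looked at (the helper names `estimate_label_error_rate` and `expected_mislabeled` are hypothetical):

```python
def estimate_label_error_rate(font_labels, my_guesses):
    """Fraction of inspected glyphs where the visual guess differs from the font's label."""
    if len(font_labels) != len(my_guesses):
        raise ValueError("expected one guess per inspected glyph")
    if len(font_labels) == 0:
        raise ValueError("no glyphs were inspected")
    # Count glyphs whose Unicode-derived label disagrees with what the glyph looks like.
    mismatches = sum(label != guess for label, guess in zip(font_labels, my_guesses))
    return mismatches / len(font_labels)


def expected_mislabeled(error_rate, num_instances):
    """Approximate number of wrong labels implied by an error rate."""
    return round(error_rate * num_instances)
```

With the figures quoted above, `expected_mislabeled(0.005, 19_000)` gives roughly 95 bad labels in the small set and `expected_mislabeled(0.065, 500_000)` gives roughly 32500 in the large one.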

The Matlab version of the dataset (.mat file) can be accessed as follows:

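```matlab
load('notMNIST_small.mat')  % provides the variables images and labels
for i = 1:5
    % open one figure per glyph, titled with its class label
    figure('Name', num2str(labels(i))), imshow(images(:,:,i)/255)
end
```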
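For readers working in Python, a roughly equivalent loader might look like the following. It is a sketch that assumes NumPy and SciPy are installed and that the file holds the `images` (height x width x N, values 0-255) and `labels` variables used in the Matlab snippet; `load_notmnist_mat` is a hypothetical helper name.

```python
import numpy as np
from scipy.io import loadmat


def load_notmnist_mat(path):
    """Load a notMNIST .mat file as (images, labels) NumPy arrays.

    Assumes `images` has shape (height, width, N) with 0-255 pixel values,
    matching how the Matlab snippet indexes images(:,:,i) and divides by 255.
    """
    data = loadmat(path)
    # Put the sample axis first, (H, W, N) -> (N, H, W), and scale to [0, 1].
    images = np.moveaxis(data["images"], -1, 0).astype(np.float32) / 255.0
    # loadmat returns vectors as 2-D arrays, so flatten labels to shape (N,).
    labels = np.asarray(data["labels"]).ravel()
    return images, labels
```

For example, after `images, labels = load_notmnist_mat("notMNIST_small.mat")`, the glyph shown in the i-th Matlab figure corresponds to `images[i - 1]`, since Python indexes from 0.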

The zipped version is just a set of PNG images grouped by class. You can turn the zipped version of the dataset into the Matlab version as follows:

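```shell
# unpack the PNG images (grouped into one folder per class)
tar -xzf notMNIST_large.tar.gz
# convert the extracted folder into a Matlab .mat file
python matlab_convert.py notMNIST_large notMNIST_large.mat
```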
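If you would rather skip the Matlab step, the class-per-folder layout can also be indexed directly. The sketch below uses only the Python standard library to pair each PNG path with an integer label. The helper name `index_png_folders`, the assumption that the folders are named `A` to `J`, and the A->0 ... J->9 mapping are all illustrative; they are not necessarily what `matlab_convert.py` does.

```python
from pathlib import Path

# Assumption: one subfolder per class, named by its letter (A/ ... J/).
# The A->0 ... J->9 mapping is illustrative and may differ from the
# integer labels stored in the .mat files.
CLASSES = "ABCDEFGHIJ"


def index_png_folders(root):
    """Return (png_path, label) pairs for every PNG in the class folders."""
    pairs = []
    for label, letter in enumerate(CLASSES):
        class_dir = Path(root) / letter
        if not class_dir.is_dir():
            continue  # skip classes missing from this copy of the data
        # Sort so the ordering is reproducible across runs and platforms.
        for png in sorted(class_dir.glob("*.png")):
            pairs.append((png, label))
    return pairs
```

After extracting the archive, `index_png_folders("notMNIST_large")` returns a list you can shuffle and split before decoding the images with whatever imaging library you prefer.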

Approaching a 0.5% error rate on notMNIST_small would be very impressive, since that is roughly the label error rate of the hand-cleaned set itself. If you run your algorithm on this dataset, please let me know your results.
