A discussion came up on Guido von Rossum's Google Plus post. It comes down to the fact that 2.1 is not exactly represented as a floating point number. Internally it's 2.0999999999999996, and this causes unexpected behavior.

These kinds of issues often come up. The confusion is caused by treating floating point numbers as exact numbers, and expecting calculations with them to produce results meaningful down to the last bit.

It is not in any way "solvable", at least not by means accessible to us (which in some sense defines it as "not a problem"). Depends too much on alignment-handling vagaries of MKL libraries, ordering of operations in BLAS, and usage, or not, of extended precision registers. See also IEEE 754: a careful reading may shed light on how different results for the same computation can arise in compliant hardware/software, even on the same machine

In addition to ambiguity in IEEE 754, which gives slight differences between different floating point libraries, there's an issue of the same library giving slightly different results on reruns with same inputs and and same machine, because of run-time optimization by the processor

The solution that Wolfram Inc came up with is to treat the last 7 bits of IEEE doubles as unknown. When testing for equality, those bits are ignored. When printing a number, it chooses representation that gives a nice printout. For instance, Print[2.0999999999999996] will display 2.1

So here's the rule of thumb for IEEE 754 floating point numbers:

When checking for equality of floating point doubles, ignore the last 7 bits of the mantissa.

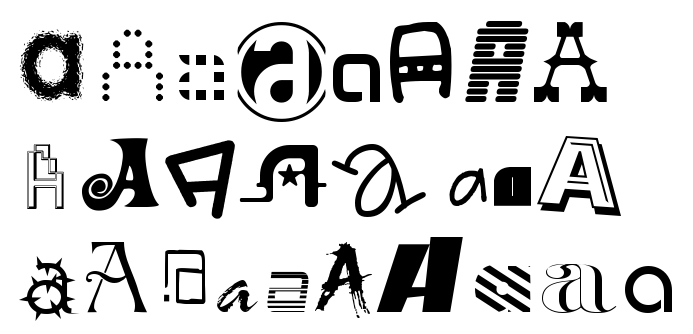

Judging by the examples, one would expect this to be a harder task than MNIST. This seems to be the case -- logistic regression on top of stacked auto-encoder with fine-tuning gets about 89% accuracy whereas same approach gives got 98% on MNIST.

Dataset consists of small hand-cleaned part, about 19k instances, and large uncleaned dataset, 500k instances. Two parts have approximately 0.5% and 6.5% label error rate. I got this by looking through glyphs and counting how often my guess of the letter didn't match it's unicode value in the font file.

Matlab version of the dataset (.mat file) can be accessed as follows:

Judging by the examples, one would expect this to be a harder task than MNIST. This seems to be the case -- logistic regression on top of stacked auto-encoder with fine-tuning gets about 89% accuracy whereas same approach gives got 98% on MNIST.

Dataset consists of small hand-cleaned part, about 19k instances, and large uncleaned dataset, 500k instances. Two parts have approximately 0.5% and 6.5% label error rate. I got this by looking through glyphs and counting how often my guess of the letter didn't match it's unicode value in the font file.

Matlab version of the dataset (.mat file) can be accessed as follows: