The foundations of probabilistic inference are often a subject of much disagreement, with some leading Bayesians going as far as to say that the MaxEnt method doesn't make any sense, and the MaxEnt camp picking on the issue of subjectivity.

The way I see it, MaxEnt and Bayesian approaches are just different ways of using external information to pick a probability distribution.

With the Bayesian approach, you are given data and a prior. Bayesian inference then comes out as a natural extension of logic to probabilities. See Ch. 1 of Cox's Algebra of Probable Inference for the full axiomatic derivation.

With the MaxEnt approach, you are given a set of valid probability distributions. You can then justify MaxEnt axiomatically, but personally I like the motivation given in Jaynes's book, Probability Theory: The Logic of Science (in particular Ch. 11).

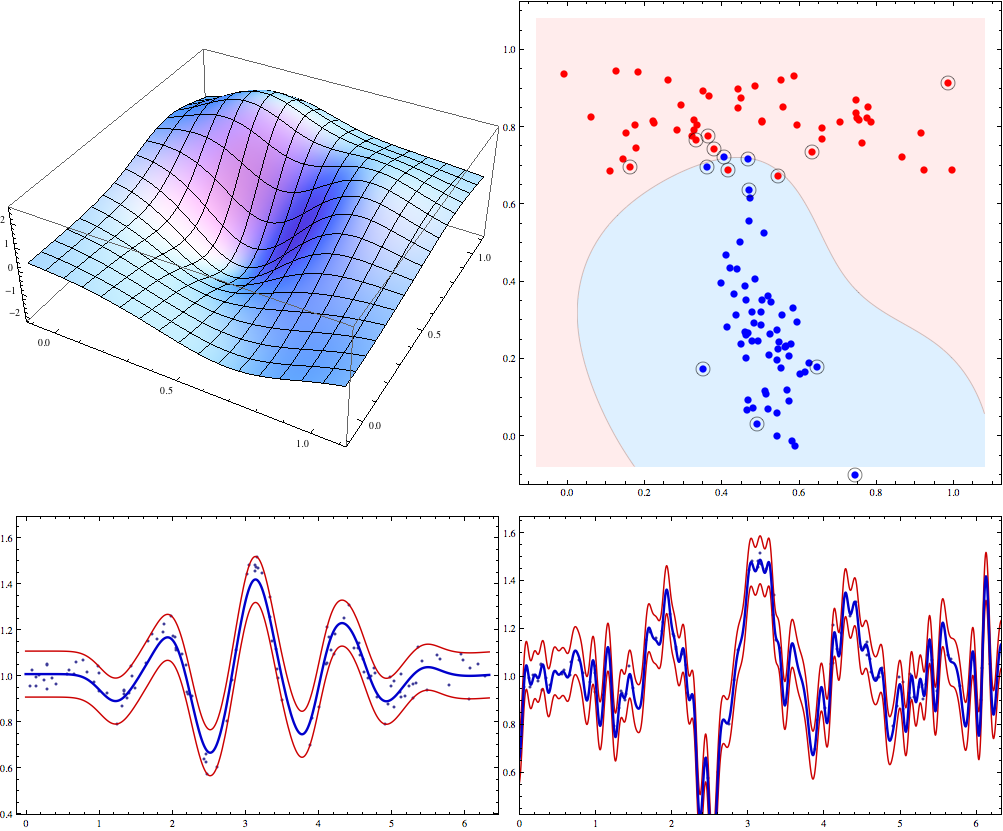

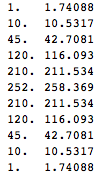

Roughly speaking, it goes as follows. Suppose we have a distribution $p$ over $d$ outcomes. We say that a sequence of $n$ observations is explained by distribution $p$ if the relative counts of outcomes in this sequence match $p$ exactly. The number of sequences matched by $p$ is the following quantity:

$$c(p)=\frac{n!}{(np_1)!(np_2)!\cdots (np_d)!}$$

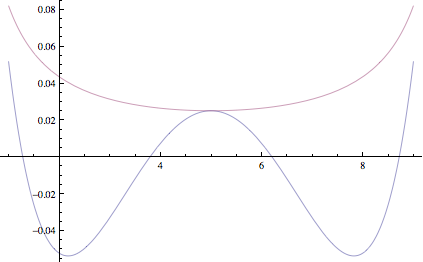

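If we have a set of valid distributions $p$, it then makes sense to pick the one with the highest $c(p)$, because it'll fit the greatest number of possible future datasets. Approximating $n!$ by $n^n$ (a crude form of Stirling's approximation; the $e^{-n}$ factors cancel because the $np_i$ sum to $n$), we get the following: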

$$\frac{1}{n}\log c(p)\approx \frac{1}{n}\log \frac{n^n}{(np_1)^{np_1}(np_2)^{np_2}\cdots (np_d)^{np_d}}=H(p)$$
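The last equality can be checked by expanding the logarithm and using $\sum_i p_i = 1$. Here $H(p)$ is taken to be the Shannon entropy with natural logarithm, which is my reading of the notation rather than something stated explicitly:

$$\frac{1}{n}\log \frac{n^n}{\prod_{i=1}^d (np_i)^{np_i}}=\log n-\sum_{i=1}^d p_i\log (np_i)=\log n-\log n\sum_{i=1}^d p_i-\sum_{i=1}^d p_i\log p_i=-\sum_{i=1}^d p_i\log p_i$$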

Hence picking the distribution with the highest $H(p)$ usually means picking the distribution that can explain the most possible observation sequences. Because it's an approximation, there can be exceptions: for instance, distributions $(\frac{12}{18},\frac{3}{18},\frac{3}{18})$ and $(\frac{9}{18},\frac{8}{18},\frac{1}{18})$ have equal entropy, but the latter explains 18% more length-18 sequences.
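The exception above is easy to check numerically. Here is a minimal sketch, with hypothetical helper names `count_sequences` and `entropy`, that takes the outcome counts $np_i$ directly so that everything stays in exact integer arithmetic:

```python
from math import factorial, log

def count_sequences(counts):
    """Multinomial coefficient n! / (k_1! k_2! ... k_d!) for outcome counts k_i."""
    c = factorial(sum(counts))
    for k in counts:
        c //= factorial(k)  # each partial quotient is still an integer
    return c

def entropy(counts):
    """Shannon entropy (natural log) of the distribution p_i = k_i / n."""
    n = sum(counts)
    return -sum(k / n * log(k / n) for k in counts if k > 0)

a = (12, 3, 3)   # p = (12/18, 3/18, 3/18)
b = (9, 8, 1)    # p = (9/18, 8/18, 1/18)

print(entropy(a), entropy(b))                   # equal up to floating-point rounding
print(count_sequences(a), count_sequences(b))   # 371280 437580
print(count_sequences(b) / count_sequences(a))  # about 1.18
```

The entropies agree because $12^{12}\cdot 3^3\cdot 3^3 = 9^9\cdot 8^8\cdot 1^1 = 2^{24}3^{18}$, while the exact counts differ by a factor of roughly 1.18.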

To carry out the inference, the Bayesian approach needs to start with a prior, whereas the MaxEnt approach needs to start with a set of valid distributions. In practice, people often use a constant function for the prior, and "distributions for which expected feature counts match observed feature counts" for the valid set of distributions.
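To make the "expected feature matches observed feature" constraint concrete, here is a small sketch for a single feature. The setup is an assumption chosen for illustration: outcomes are die faces 1 to 6, the feature is the face value, and the observed average is 4.5. The sketch relies on the standard fact that the maximum-entropy distribution under a mean constraint has the form $p_i \propto e^{\lambda x_i}$, and finds $\lambda$ by bisection. The function name `maxent_with_mean` and its parameters are hypothetical.

```python
from math import exp

def maxent_with_mean(values, target_mean, lo=-50.0, hi=50.0, iters=200):
    """Max-entropy distribution over `values` whose mean equals `target_mean`.

    Assumes target_mean lies strictly between min(values) and max(values).
    """
    def dist(lam):
        # Subtract the largest exponent before exponentiating to avoid overflow.
        m = max(lam * v for v in values)
        w = [exp(lam * v - m) for v in values]
        z = sum(w)
        return [x / z for x in w]

    def mean(lam):
        return sum(v * p for v, p in zip(values, dist(lam)))

    # The mean is increasing in lam (its derivative is the variance), so bisect.
    for _ in range(iters):
        mid = (lo + hi) / 2
        if mean(mid) < target_mean:
            lo = mid
        else:
            hi = mid
    return dist((lo + hi) / 2)

faces = [1, 2, 3, 4, 5, 6]
p = maxent_with_mean(faces, 4.5)
print(p)
print(sum(f * q for f, q in zip(faces, p)))  # should be close to 4.5
```

With several features the same idea applies, but $\lambda$ becomes a vector and bisection would have to be replaced by a multivariate solver.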

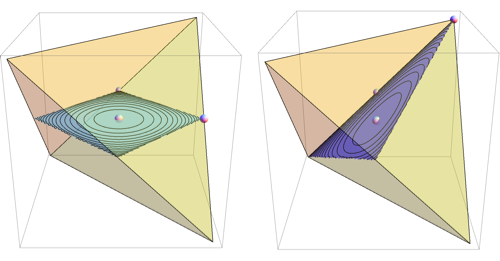

Note that when dealing with continuous distributions, the result of MaxEnt inference depends on the choice of measure over our set of distributions, so I think it's hard to justify in the continuous case.

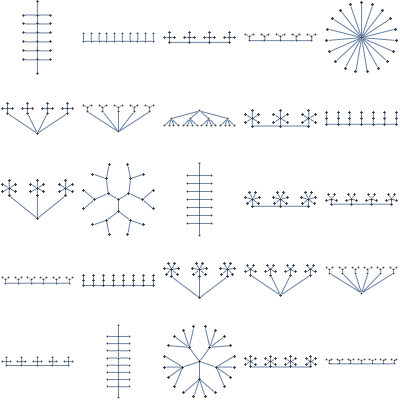

Here are some papers I scanned a while back on the foundations of the Bayesian and MaxEnt approaches.